Usually, I use Power Pivot and VBA in Excel to measure Power Query performance by comparing refresh time.

But, I suppose Power BI Desktop refresh process may be different, therefore would be nice to have something that would allow measure time between start and end of refresh.

Unfortunately, we do not have VBA in Power BI Desktop, nor can trigger and monitor refresh of Power BI Desktop from another application. So, we have only M and queries.

For the experiment I’ve created a new Power BI Desktop file, three queries in it, and put queries in order Start-Delay-End

Start

= DateTime.LocalNow()

Delay

= Function.InvokeAfter(()=> DateTime.LocalNow(), #duration(0,0,0,3))

End

= DateTime.LocalNow()

“Delay” must make a 3-seconds delay. You may read about Function.InvokeAfter in old good post from Chris Webb.

The idea is naive – hope that Power BI Desktop will execute queries in the same order as in the list of queries.

If so – query “Start” will load start time, Delay will make a pause, then “End” will load time of the end of refresh.

However, by default, Power BI Desktop loads tables in parallel, to optimize load time.

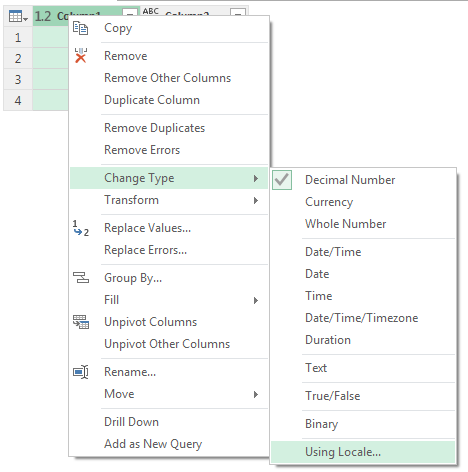

This property can be found in Options -> Current File ( Data Load ) -> Enable parallel loading of tables

This really works. I was receiving same time for Start and End tables while this property was enabled.

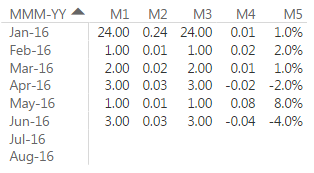

When I disabled it, I finally got desired difference between Start and End

Then I changed order of queries in the list of queries to check whether it impacts on execution order

Result shows that “Yes – order does matter“:

Of course, Power Query engine must be generating Execution plan when user press Refresh and then follows it. But in simple scenarios, when “Parallel loading of tables” is disabled, seems like Power BI Desktop follows order of queries from Query Editor.

I have no groups of queries, have no references between queries etc. It allowed me to check load time.

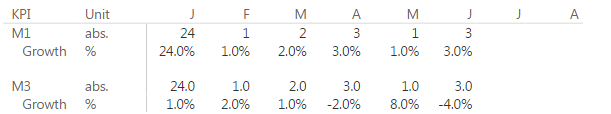

What about complex models

I tried to use same technique in more complex file – with groups of queries (but with no references).

I created query “Start” and placed it into the first group.

Similar for “End” query – but in the last group.

Result seems correct

Conclusion

Above is, of course, not a serious solution. Mainly, because you won’t want to disable parallel loading of tables, and won’t rely on order or queries.

Nevertheless, it would be good to have total refresh time directly in the model. It would allow to monitor refresh time of growing datasets.

More sophisticated way of query engine processing analysis is hidden in diagnostics of trace logs. You may read about this in several posts from Chris Webb here, here, and here.